Event-Driven Design Patterns for Data Engineering

Event-driven designs for elegant real-time data processing.

Introduction

Event-driven architecture (EDA) is a software design paradigm that revolves around producing and consuming events to trigger processing. In this architecture, systems are built to respond to events in real-time or near real-time, allowing for more dynamic and responsive applications.

Data engineers often find comfort in the simplicity of cron schedules and workflow orchestration tools like Airflow, Kestra, and Databricks Jobs. However, EDA can also be a powerful pattern in the data engineer's toolkit.

Event-driven data engineering use cases

We’ll focus on design patterns in a sec, but first let’s recap a few use cases where EDA might come in handy:

File Processing: process or ingest files with an unpredictable file arrival schedule.

User Submissions: asynchronously process user submissions such as BI reports, security audits, or data deliveries.

Cyber Security: monitor and react to Endpoint Detection and Response (EDR) events to keep devices and environments secure.

IoT data processing: process and react to streams of data from IoT devices.

Healthcare Monitoring: monitor patient data from various sources and provide alerts and insights to healthcare professionals for better patient care.

1. Change Data Capture (CDC)

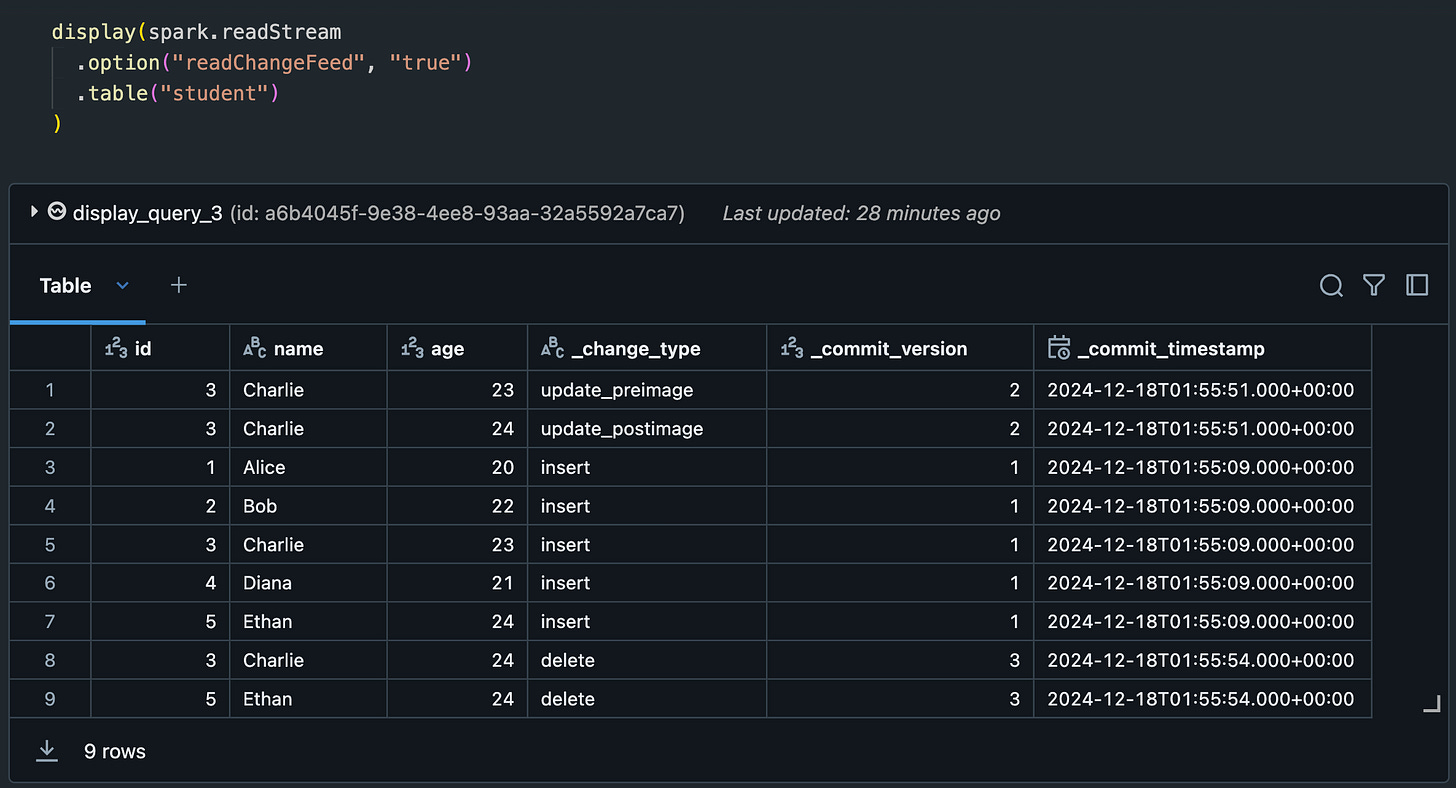

Change data capture, or CDC, is a method of capturing and processing every insert, update, and delete made to a table. If you’re using a table format such as Delta Lake or Iceberg, you can typically enable a change feed on the table (Delta Lake calls this Change Data Feed) and query it.

Because a table can store any structured or semi-structured data, CDC can be useful for most of the aforementioned use cases. The exception is file processing, where the trigger is a file landing in storage rather than a row changing in a table.
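To make the idea concrete, here is a minimal sketch of a consumer replaying a change feed onto a keyed snapshot. The `_change_type` and `_commit_version` columns are modelled on Delta Lake's Change Data Feed; other formats name these differently. The `id` and `status` columns and the `apply_changes` helper are hypothetical, and a real pipeline would more likely do this as a merge in Spark than in plain Python.

```python
from typing import Any


def apply_changes(target: dict, changes: list) -> dict:
    """Replay change rows onto a snapshot keyed by `id`, oldest commit first."""
    # sorted() is stable, so rows within one commit keep their feed order.
    for row in sorted(changes, key=lambda r: r["_commit_version"]):
        kind = row["_change_type"]
        key = row["id"]
        if kind in ("insert", "update_postimage"):
            # Keep only the data columns, dropping the feed's metadata columns.
            target[key] = {k: v for k, v in row.items() if not k.startswith("_")}
        elif kind == "delete":
            target.pop(key, None)
        # "update_preimage" rows carry the old values and are skipped here.
    return target


# Hypothetical change rows for an orders table.
changes: list[dict[str, Any]] = [
    {"id": 1, "status": "new", "_change_type": "insert", "_commit_version": 1},
    {"id": 2, "status": "new", "_change_type": "insert", "_commit_version": 1},
    {"id": 1, "status": "new", "_change_type": "update_preimage", "_commit_version": 2},
    {"id": 1, "status": "shipped", "_change_type": "update_postimage", "_commit_version": 2},
    {"id": 2, "status": "new", "_change_type": "delete", "_commit_version": 3},
]
snapshot = apply_changes({}, changes)
```

After the replay, the snapshot holds only order 1 with its latest status, since order 2 was deleted in the last commit.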

2. Trigger on File Arrival

I am a huge fan of Databricks and have to make a plug for its File Arrival Triggers, which I cover in a separate blog post.

However, if you aren’t on Databricks, have no fear. You can also build this pattern with common cloud services. For example, in AWS you could use S3 + S3 Event Notifications + Lambda. Additionally, some orchestration tools like Kestra support S3 triggers natively.
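For the S3 + S3 Event Notifications + Lambda route, a handler could look roughly like the sketch below. It assumes the standard S3 notification payload (a `Records` list with `eventName`, bucket name, and a URL-encoded object key). The `extract_new_objects` helper is a hypothetical name, and the `print` is a placeholder for whatever ingestion logic you actually run.

```python
from urllib.parse import unquote_plus


def extract_new_objects(event: dict) -> list:
    """Return (bucket, key) pairs for every object-created record in the event."""
    objects = []
    for record in event.get("Records", []):
        # Ignore anything that is not an object creation (e.g. deletes).
        if not record.get("eventName", "").startswith("ObjectCreated"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # Keys arrive URL-encoded, with spaces encoded as "+".
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append((bucket, key))
    return objects


def handler(event, context=None):
    """Lambda entry point: hand each new file to the processing step."""
    new_objects = extract_new_objects(event)
    for bucket, key in new_objects:
        print(f"new file: s3://{bucket}/{key}")  # placeholder for real processing
    return {"processed": len(new_objects)}
```

Keeping the parsing in its own function makes it easy to unit test with a hand-built event before wiring up the bucket notification.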

Obviously this can be used to process any arbitrary file data as it lands in storage. Another great use case for this pattern is to take user submissions and generate a manifest file in S3 that describes the request, then use this pattern to trigger a data process against the submission. Some ideas for user submissions could be an intensive data analytics report, kicking off ad-hoc security audits, or a data resynchronization process for runbooks.
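As a rough illustration of the manifest idea, the sketch below builds a small JSON manifest and the object key it would be written to. Every field name, the `submissions/` prefix, and the `build_manifest` helper are assumptions made for this example rather than any standard; the upload itself (for instance with an S3 client) is left out.

```python
import json
import uuid
from datetime import datetime, timezone


def build_manifest(request_type: str, requested_by: str, parameters: dict) -> tuple:
    """Return (object_key, manifest_json) describing one user submission."""
    request_id = str(uuid.uuid4())
    manifest = {
        "request_id": request_id,
        "request_type": request_type,  # e.g. "security_audit" (hypothetical)
        "requested_by": requested_by,
        "submitted_at": datetime.now(timezone.utc).isoformat(),
        "parameters": parameters,
    }
    # One manifest per request, grouped by type so triggers can filter on prefix.
    key = f"submissions/{request_type}/{request_id}.json"
    return key, json.dumps(manifest, indent=2)


key, body = build_manifest("security_audit", "analyst@example.com", {"scope": "prod"})
```

Writing `body` to `key` in the watched bucket is then what fires the file arrival trigger, and the downstream process reads the manifest to decide what to run.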

Note: if you are receiving a large number of files at a high frequency, a solution using Databricks Auto Loader would scale better.

3. SNS + SQS + Lambda

Another popular AWS recipe is Simple Notification Service (SNS), Simple Queue Service (SQS), and Lambda Functions.

At this point nearly all AWS services have some integration with SNS, as do many third-party applications.

By subscribing an SQS queue to your SNS topic, you make the processing more scalable, durable, and performant: SQS provides retries (redrive) and batching, and you can optionally set up a Dead Letter Queue (DLQ) for messages that repeatedly fail processing.

This design pattern is useful for so many use cases because SNS is so easy to integrate. Many monitoring tools like CloudWatch, Datadog, New Relic, Grafana, and others support SNS topics as a notification destination, so you could create alert processors for real-time operations. You could also use this for user activity and IoT events, ingesting them into a lakehouse table or OLTP database.
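Here is a minimal sketch of the Lambda end of this recipe. It assumes raw message delivery is turned off on the subscription, so each SQS body is the SNS JSON envelope with the original payload in its `Message` field, and it assumes the event source mapping has `ReportBatchItemFailures` enabled so that only failed messages are retried. `process_notification` and the `alarm` field are hypothetical stand-ins for your own logic.

```python
import json


def process_notification(message: str) -> None:
    """Hypothetical business logic; raising marks the message as failed."""
    payload = json.loads(message)
    if "alarm" not in payload:
        raise ValueError("unexpected payload")


def handler(event, context=None):
    """Process an SQS batch and report only the messages that failed."""
    failures = []
    for record in event["Records"]:
        try:
            envelope = json.loads(record["body"])  # SNS envelope inside the SQS body
            process_notification(envelope["Message"])
        except Exception:
            # SQS redelivers only these; after enough failed receives a
            # configured DLQ takes them.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Returning the failed IDs rather than raising means one bad message does not force the whole batch to be retried.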

4. Streaming Services

Moving into more continuous data feeds? High volume, low latency? This is when scalable streaming services such as Kafka, Kinesis, or Apache Pulsar are a better choice.

Streaming tools like these excel at high-volume, continuous data that needs immediate processing. They also typically store data in a persistent manner, allowing you to replay events if needed.

Many technologies make it easy to read from or write to streams like Kinesis and Kafka. For example, you could:

Trigger an AWS Lambda function directly

Read/write with Apache Spark

Read/write with Apache Flink

This would be appropriate for more demanding use cases such as high-volume streams of IoT data, auditing API usage or user activity, real-time anomaly detection, processing financial transactions, and more.
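For the Lambda option, a Kinesis-triggered function receives records whose payload is base64-encoded, alongside a partition key and sequence number. The sketch below decodes them, assuming producers write JSON; the `decode_records` helper and the output field names are my own choices for illustration.

```python
import base64
import json


def decode_records(event: dict) -> list:
    """Decode the base64 JSON payload of each Kinesis record in a Lambda event."""
    decoded = []
    for record in event["Records"]:
        kinesis = record["kinesis"]
        payload = json.loads(base64.b64decode(kinesis["data"]))
        decoded.append(
            {
                "partition_key": kinesis["partitionKey"],
                # Sequence numbers order records within a shard.
                "sequence_number": kinesis["sequenceNumber"],
                "payload": payload,
            }
        )
    return decoded


def handler(event, context=None):
    """Lambda entry point: decode the batch, then hand it to real processing."""
    records = decode_records(event)
    return {"received": len(records)}
```

The same decode step applies whether the records then go to a lakehouse table, an anomaly detector, or another stream.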

Cautions…

As with any design pattern, we should be mindful of its limitations. Not every limitation applies to every EDA solution, but here are some things to be aware of:

Event Ordering: does sequence matter to your system? Does your EDA design enforce order? E.g. if using SQS, you may need a FIFO queue, and with Kinesis pay attention to your sequenceNumber.

Duplicates: can events be duplicated and is this ok? Tip: at times this can actually be a feature, akin to replaying data.

Observability: EDA systems usually have higher complexity; metrics and monitoring are important for observability. E.g. are you able to monitor whether events fail to deliver or are delayed? If processing falls behind, can you monitor the backlog waiting to be processed?

Complexity: again, EDA can be more complex to monitor and troubleshoot. Consider whether your use case even demands EDA. E.g. if using it for file arrival triggers, do your files arrive consistently enough that a simple schedule would do? Remember, if all you have is a hammer… everything looks like a nail.
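One common way to tame the ordering and duplicate concerns above is an idempotent consumer: remember which event IDs you have handled and ignore anything older than what you already applied. The sketch below keeps that state in memory purely for illustration; a real system would need a durable store, and the `event_id`, `key`, `sequence`, and `payload` fields are hypothetical names, not something any of the services above hand you in this shape.

```python
class IdempotentConsumer:
    """Skips duplicate events and events older than the state already applied."""

    def __init__(self):
        self.seen_ids = set()  # event IDs already handled
        self.latest = {}       # entity key -> (sequence, payload)

    def handle(self, event: dict) -> bool:
        """Apply the event and return True, or return False if it was skipped."""
        if event["event_id"] in self.seen_ids:
            return False  # duplicate delivery
        self.seen_ids.add(event["event_id"])

        current = self.latest.get(event["key"])
        if current is not None and current[0] >= event["sequence"]:
            return False  # arrived out of order; newer state already applied

        self.latest[event["key"]] = (event["sequence"], event["payload"])
        return True


consumer = IdempotentConsumer()
first = {"event_id": "a", "key": "device-1", "sequence": 1, "payload": {"temp": 20}}
second = {"event_id": "b", "key": "device-1", "sequence": 2, "payload": {"temp": 22}}
results = [consumer.handle(e) for e in (second, first, second)]
```

In that usage the late-arriving `first` and the redelivered `second` are both skipped, so `device-1` keeps the payload from sequence 2. With this in place, replaying a stream becomes safe rather than scary.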

Conclusion

That concludes this rundown of a few EDA design patterns for data engineering. These patterns can make data ingestion and processing more real-time, more responsive, and potentially more cost-effective.

I hope you have enjoyed this read. I would seriously love to hear feedback and other ideas for your design patterns!

Also, if you don’t mind supporting the MakeWithData blog (I promise it’s really just me, an individual guy that just loves to nerd out) please like this post and even consider pledging for future posts below!
