Trigger Databricks Jobs on File Arrival

Use this new feature to build event-driven Databricks Jobs that trigger when files arrive in your cloud storage. We'll look at four example use cases.

Databricks released the File Arrival Trigger feature for its Jobs/Workflows this year. Essentially, you point the job at a cloud storage location, such as S3, ADLS, or GCS, and the job triggers whenever new files arrive there.

This is very useful for creating event-driven jobs. Previously, accomplishing this would have required gluing together a lot of cloud-specific tooling, such as an S3 bucket with S3 Events feeding a Lambda function that finally invokes the Databricks SDK, API, or CLI to run the job. So, I see this new feature as a great and very practical simplification.
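To make "pointing the job at a storage location" concrete, here is a minimal sketch of the trigger portion of a job definition. The field names (`file_arrival`, `url`, `min_time_between_triggers_seconds`, `wait_after_last_change_seconds`) follow my understanding of the Jobs API 2.1 job settings, so verify them against the current API reference before relying on them. The bucket path is a made-up example.

```python
import json


def file_arrival_trigger(url: str,
                         min_time_between_triggers_seconds: int = 60,
                         wait_after_last_change_seconds: int = 60) -> dict:
    """Build the `trigger` portion of a job settings payload.

    Assumption: key names mirror the Jobs API 2.1 `trigger.file_arrival` block.
    """
    if not url:
        raise ValueError("a storage URL is required")
    return {
        "trigger": {
            "pause_status": "UNPAUSED",
            "file_arrival": {
                "url": url,
                "min_time_between_triggers_seconds": min_time_between_triggers_seconds,
                "wait_after_last_change_seconds": wait_after_last_change_seconds,
            },
        }
    }


# Hypothetical bucket and prefix, for illustration only.
payload = file_arrival_trigger("s3://example-vendor-bucket/incoming/")
print(json.dumps(payload, indent=2))
```

The resulting dictionary would be merged into the rest of the job settings (tasks, clusters, and so on) before being sent to the job create or update endpoint, or the same values can be entered in the Jobs UI.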

Let's tour a few use cases where you may want to use file arrival triggers.

1. Irregular Schedule

The first use case is even called out in the Databricks docs:

You can use this feature when a scheduled job might be inefficient because new data arrives on an irregular schedule.

Say you receive files on S3 from a data vendor that collects and provides cross-reference data for your industry. The vendor does not deliver new files on a regular cron schedule. There is usually one file per day, arriving anywhere from 1 p.m. to 6 p.m. UTC. Because the vendor pushes the files manually, though, sometimes no file arrives on holidays or weekends, when someone forgets to push it or backup staff are covering.

Naive Solution: Schedule a job to run hourly every day from 1 p.m. to 6 p.m. UTC (six runs per day). Write code in the job to look for new files and exit if none are found.

With one file per day at most, this guarantees at least five wasted runs every day, and six on holidays and weekends when no file arrives.

File Arrival Solution: Use file arrival triggers. There are no wasted runs, and the code can focus on the business logic instead of checking for new files.
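The wasted-run arithmetic for the naive schedule can be checked with a small simulation. This is only a sketch: the hourly schedule comes from the example above, while the 15:30 UTC arrival time is an arbitrary assumption.

```python
def wasted_runs(scheduled_hours, arrival_hours):
    """Count scheduled runs that find no new file to process.

    Each run consumes every file that arrived at or before its start time
    and has not been picked up by an earlier run.
    """
    pending = sorted(arrival_hours)
    wasted = 0
    for hour in sorted(scheduled_hours):
        if any(arrival <= hour for arrival in pending):
            # This run does useful work; drop the files it consumed.
            pending = [arrival for arrival in pending if arrival > hour]
        else:
            wasted += 1
    return wasted


hourly_schedule = range(13, 19)  # 13:00 through 18:00 UTC, six runs

# Typical weekday: one file lands at 15:30 UTC (assumed for illustration).
print(wasted_runs(hourly_schedule, [15.5]))

# Holiday or weekend with no delivery.
print(wasted_runs(hourly_schedule, []))
```

With a file arrival trigger, the job runs only in response to an arrival, so the equivalent count is zero in both cases.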

2. Batch Submissions

We now work for a FinTech company, and the data team works closely with the front-end team. The front-end team wants users to be able to request an export of all their transactions for a given date range and then receive an email with the export attached as files.

We can build a solution using file arrival triggers and a clever manifest file. The design works as follows.

When a user fills out the submission form on the web app, the front end generates a JSON manifest file that encapsulates their input:

    {
      "userId": 123,
      "emailRecipients": [
        "john.doe@example.com"
      ],
      "requestType": "EMAIL_REPORT",
      "parameters": {
        "dateRange": {
          "start": "2024-01-01",
          "end": "2024-09-01"
        }
      }
    }

This file is dropped into an S3 bucket, which triggers a Databricks Job. Our code processes the request and emails the user. This solution can easily be extended with additional parameters and request types as the application evolves.

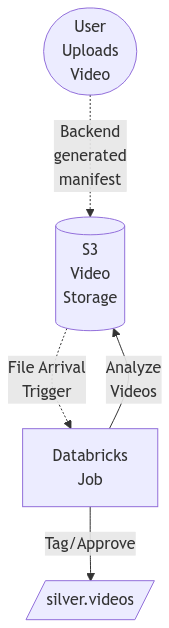
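On the job side, the first step is to read and validate the manifest before doing any work. Below is a minimal sketch that parses the manifest format shown above. The `ExportRequest` class and `parse_manifest` function are names I made up for illustration, and the validation rules are assumptions about what a reasonable job would check.

```python
import json
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ExportRequest:
    user_id: int
    email_recipients: tuple
    request_type: str
    start: date
    end: date


def parse_manifest(text: str) -> ExportRequest:
    """Parse a manifest JSON string and reject obviously invalid requests."""
    raw = json.loads(text)
    date_range = raw["parameters"]["dateRange"]
    request = ExportRequest(
        user_id=int(raw["userId"]),
        email_recipients=tuple(raw["emailRecipients"]),
        request_type=raw["requestType"],
        start=date.fromisoformat(date_range["start"]),
        end=date.fromisoformat(date_range["end"]),
    )
    if not request.email_recipients:
        raise ValueError("manifest has no email recipients")
    if request.start > request.end:
        raise ValueError("dateRange.start is after dateRange.end")
    return request


EXAMPLE_MANIFEST = """
{
  "userId": 123,
  "emailRecipients": ["john.doe@example.com"],
  "requestType": "EMAIL_REPORT",
  "parameters": {"dateRange": {"start": "2024-01-01", "end": "2024-09-01"}}
}
"""

request = parse_manifest(EXAMPLE_MANIFEST)
print(request.request_type, request.start, request.end)
```

Keeping the parsed request in a typed object makes it straightforward to dispatch on `request_type` later as new request types are added.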

3. User Content Moderation

Similar to #1, we may receive irregular data when end users generate it. Working at a media hosting company, we have images and videos uploaded by users sporadically. We are required to moderate and tag the content for anything out of compliance.

File arrival triggers allow us to create a job focused on content moderation (e.g., illicit image detection, age restrictions, copyright violations) that runs as new images and videos are uploaded.

For an end-to-end solution for content moderation, the job may ingest the unstructured data to a Delta Lake table and store additional columns, such as the auto-moderation status and tags.
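As a rough illustration of those additional columns, the sketch below builds one record per uploaded file with a moderation status and tags. Everything here is hypothetical: the column names, the status values, and the `fake_detector` stand-in, which takes the place of whatever real image or video classifier the job would call.

```python
from dataclasses import dataclass, asdict, field


@dataclass
class ModerationRecord:
    path: str                                  # location of the uploaded file
    status: str                                # "APPROVED" or "FLAGGED" (assumed values)
    tags: list = field(default_factory=list)   # reasons the content was flagged


def moderate(path, detect_tags):
    """Run a detector over one file and wrap the result as a table row."""
    tags = sorted(detect_tags(path))
    status = "FLAGGED" if tags else "APPROVED"
    return ModerationRecord(path=path, status=status, tags=tags)


def fake_detector(path):
    # Stand-in for a real model: flags files by name, for demonstration only.
    return {"copyright"} if "movie_clip" in path else set()


# Hypothetical upload paths.
uploads = ["s3://example-media/uploads/cat.png",
           "s3://example-media/uploads/movie_clip.mp4"]
rows = [asdict(moderate(path, fake_detector)) for path in uploads]
for row in rows:
    print(row)
```

In a Databricks job, rows like these could then be appended to a Delta table, for example by creating a DataFrame from them and writing it in append mode.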

4. CCPA Requests

The California Consumer Privacy Act (CCPA) provides consumers with rights, such as requesting that their data be removed from companies' products or deleted altogether.

Similar to #2, a good solution is to use file arrival triggers to process manifest files encapsulating the CCPA requests. We can create a Databricks Job to process the file/request, updating tables and/or deleting from them where rows match the requested email address or PII.

Bonus Topic: We can facilitate this even better by using table and column tags in Unity Catalog to tag data containing PII. The information can then be retrieved programmatically by querying the information_schema.
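Here is a sketch of how the tag lookup and the deletes might fit together. It assumes that Unity Catalog exposes column tags through `system.information_schema.column_tags` with `catalog_name`, `schema_name`, `table_name`, `column_name`, and `tag_name` columns, and that the runtime supports named parameter markers (`:email`) in SQL. The tag name `pii_email` and the table names are invented for the example, so check all of this against your own workspace.

```python
# Query to find every column carrying a given tag (assumed view and columns).
PII_COLUMNS_QUERY = """
SELECT catalog_name, schema_name, table_name, column_name
FROM system.information_schema.column_tags
WHERE tag_name = :tag_name
"""


def build_delete_statements(tagged_columns):
    """Turn (catalog, schema, table, column) tuples into DELETE statements.

    The email value is never interpolated; each statement uses the
    `:email` parameter marker so it can be bound at execution time.
    Identifiers are backtick-quoted and assumed not to contain backticks.
    """
    by_table = {}
    for catalog, schema, table, column in tagged_columns:
        by_table.setdefault((catalog, schema, table), []).append(column)

    statements = []
    for (catalog, schema, table), columns in sorted(by_table.items()):
        predicate = " OR ".join(f"`{c}` = :email" for c in sorted(columns))
        statements.append(
            f"DELETE FROM `{catalog}`.`{schema}`.`{table}` WHERE {predicate}"
        )
    return statements


# Hypothetical result of running PII_COLUMNS_QUERY with tag_name = "pii_email".
tagged = [
    ("main", "sales", "customers", "email"),
    ("main", "sales", "orders", "billing_email"),
    ("main", "sales", "orders", "contact_email"),
]
for statement in build_delete_statements(tagged):
    print(statement)
```

Inside the job, each statement could be executed with something like `spark.sql(statement, args={"email": requested_email})`, with the email taken from the manifest file. Driving the deletes from tags means newly tagged tables are covered without changing the job code.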

Conclusion

We barely scratched the surface of what's possible with File Arrival Triggers. I encourage you to try them out, and I hope Databricks continues to ship strategic features like this in Workflows.

I love seeing solutions across industries--let me know in the comments if you have another use case for file arrival triggers!