🍷FineWeb: the new Pile 🤔

What is FineWeb?

FineWeb is a large-scale web corpus created by Hugging Face for training state-of-the-art Large Language Models (LLMs). It is massive: it contains over 15 trillion tokens of cleaned and deduplicated English web data from the CommonCrawl project.

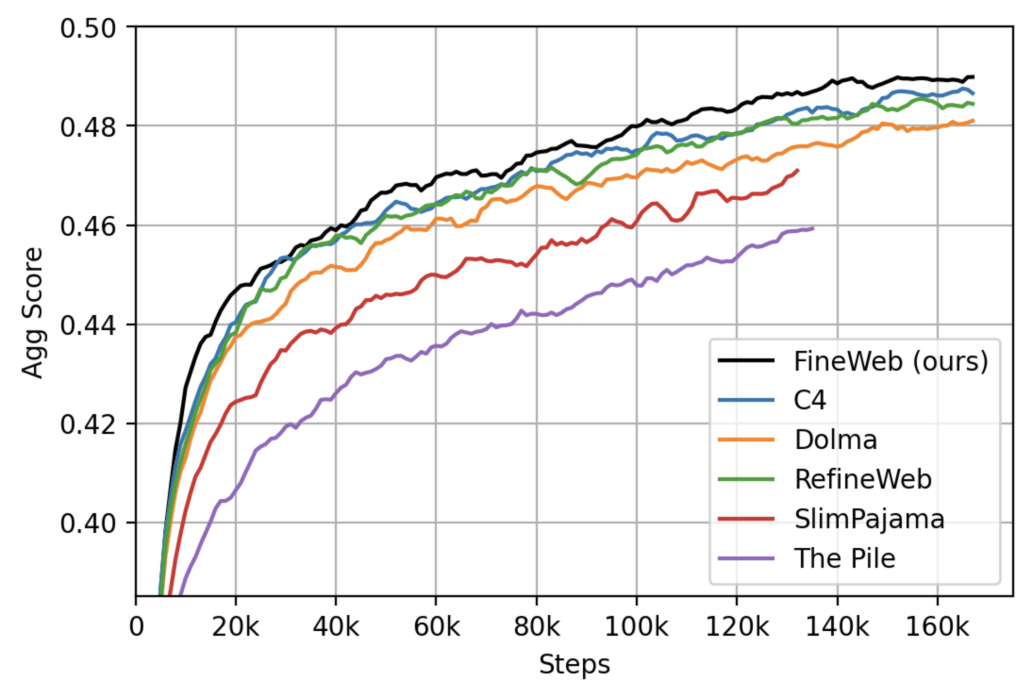

According to Hugging Face, their dataset “was originally meant to be a fully open replication of RefinedWeb, with a release of the full dataset under the ODC-By 1.0 license. However, by carefully adding additional filtering steps, [they] managed to push the performance of FineWeb well above that of the original RefinedWeb, and models trained on our dataset also outperform models trained on other commonly used high quality web datasets (like C4, Dolma-v1.6, The Pile, SlimPajama) on our aggregate group of benchmark tasks.”[1]

FineWeb vs. RefinedWeb vs. The Pile

Size and Scale: FineWeb contains over 15 trillion tokens and has a download size of 45 TB, whereas RefinedWeb contains only about 5 trillion tokens (1.68 TB) and The Pile about 1.35 trillion tokens (886 GB).

Data Cleaning: the team at Hugging Face focused on high-quality web pages, applying a series of filtering steps to remove spam, duplicate data, and low-quality pages.
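To make “filtering” a bit more concrete, here is a minimal Python sketch of line-level quality heuristics in the spirit of those Hugging Face describes for FineWeb (how many lines end in punctuation, how much text sits in repeated lines, how many lines are very short). The function name and the threshold values are my own assumptions for illustration, not datatrove’s API or Hugging Face’s exact settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FilterConfig:
    # Illustrative thresholds (assumptions, not FineWeb's exact settings).
    min_punct_line_ratio: float = 0.12    # reject if too few lines end in punctuation
    max_dup_line_char_ratio: float = 0.1  # reject if too many chars are in repeated lines
    max_short_line_ratio: float = 0.67    # reject if most lines are very short
    short_line_chars: int = 30


TERMINAL_PUNCT = (".", "!", "?", '"', "'")


def passes_quality_filters(text, cfg=FilterConfig()):
    """Return True if a document survives all three heuristic checks."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines:
        return False

    # 1. Fraction of non-empty lines ending with terminal punctuation.
    punct_ratio = sum(ln.endswith(TERMINAL_PUNCT) for ln in lines) / len(lines)
    if punct_ratio < cfg.min_punct_line_ratio:
        return False

    # 2. Fraction of characters in lines that occur more than once
    #    (every occurrence of a repeated line is counted).
    counts = {}
    for ln in lines:
        counts[ln] = counts.get(ln, 0) + 1
    total_chars = sum(len(ln) for ln in lines)
    dup_chars = sum(len(ln) for ln in lines if counts[ln] > 1)
    if dup_chars / total_chars > cfg.max_dup_line_char_ratio:
        return False

    # 3. Fraction of lines shorter than short_line_chars characters.
    short_ratio = sum(len(ln) < cfg.short_line_chars for ln in lines) / len(lines)
    if short_ratio > cfg.max_short_line_ratio:
        return False

    return True
```

A page made only of navigation links such as `Home`, `About`, `Contact` fails the first check, while ordinary prose passes all three.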

PII Formatting: personally identifiable information (PII) such as email addresses and public IP addresses scraped from web pages is also anonymized.
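A rough idea of what this kind of PII formatting does can be shown with regular expressions. This sketch is an assumption, not datatrove’s implementation: the patterns are simplified, and the `<EMAIL>` and `<IP_ADDRESS>` placeholders are hypothetical. It uses Python’s standard `ipaddress` module so that only public (globally routable) IPv4 addresses are masked.

```python
import ipaddress
import re

# Simplified patterns for illustration; real-world email/IP matching is messier.
EMAIL_RE = re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")

# Hypothetical placeholder tokens (not the values datatrove uses).
EMAIL_PLACEHOLDER = "<EMAIL>"
IP_PLACEHOLDER = "<IP_ADDRESS>"


def _mask_ip(match):
    candidate = match.group(0)
    try:
        addr = ipaddress.ip_address(candidate)
    except ValueError:
        # e.g. "999.1.1.1" looks like an IP but is not valid, so leave it alone.
        return candidate
    # Mask only public addresses; private ones such as 192.168.x.x are kept.
    return IP_PLACEHOLDER if addr.is_global else candidate


def anonymize_pii(text):
    """Replace email addresses and public IPv4 addresses with placeholders."""
    text = EMAIL_RE.sub(EMAIL_PLACEHOLDER, text)
    return IPV4_RE.sub(_mask_ip, text)
```

For example, `anonymize_pii("Mail jane.doe@example.org from 8.8.8.8")` returns `"Mail <EMAIL> from <IP_ADDRESS>"`.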

Data Sources

So it comes from the internet, but how exactly did Hugging Face obtain the data?

Filtering the Crawl: FineWeb does not crawl the web itself; it filters CommonCrawl’s existing crawls to favor higher-quality content and to remove harmful content, for example by dropping pages whose URLs appear on blocklists of adult sites.
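URL-level filtering of this kind can be sketched as a blocklist check on each record’s hostname. The domains below are placeholders, and `is_blocked` and `filter_urls` are hypothetical helpers of my own; production pipelines rely on much larger curated blocklists.

```python
from urllib.parse import urlsplit

# Placeholder entries for illustration only; real pipelines use large curated lists.
BLOCKED_DOMAINS = frozenset({"spam-example.com", "adult-example.net"})


def is_blocked(url, blocked=BLOCKED_DOMAINS):
    """True if the URL's host is a blocked domain or any subdomain of one."""
    host = (urlsplit(url).hostname or "").lower()
    labels = host.split(".")
    # Check "a.b.c", then "b.c", then "c" so subdomains inherit the block.
    return any(".".join(labels[i:]) in blocked for i in range(len(labels)))


def filter_urls(records):
    """Keep records (dicts with a 'url' key) whose URL is not blocked."""
    return [r for r in records if not is_blocked(r["url"])]
```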

Expert Vetting: the dataset was processed with the datatrove library and went through expert vetting and analysis to determine the optimal deduplication approach, rather than simply applying a single deduplication method across the entire dataset.
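Hugging Face’s write-up describes MinHash deduplication applied to each CommonCrawl dump on its own. The sketch below shows the general idea in plain Python: word 5-gram shingles, a MinHash signature, and locality-sensitive hashing (LSH) bands, where documents sharing any band are treated as near-duplicates. The signature size (112 hashes in 14 bands of 8 rows) and the greedy keep-the-first policy are assumptions for illustration; this is not datatrove’s code.

```python
import hashlib

NUM_HASHES = 112            # assumed signature length
BANDS = 14                  # assumed number of LSH bands
ROWS = NUM_HASHES // BANDS  # 8 hash values per band
NGRAM = 5                   # word 5-gram shingles


def shingles(text, n=NGRAM):
    """Set of lowercase word n-grams; very short texts become a single shingle."""
    words = text.lower().split()
    if len(words) < n:
        return {" ".join(words)}
    return {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}


def _hash(shingle, seed):
    # Salting BLAKE2b with the seed gives a family of independent 64-bit hashes.
    digest = hashlib.blake2b(shingle.encode("utf-8"), digest_size=8,
                             salt=seed.to_bytes(16, "little")).digest()
    return int.from_bytes(digest, "little")


def minhash_signature(text):
    grams = shingles(text)
    return [min(_hash(g, seed) for g in grams) for seed in range(NUM_HASHES)]


def band_keys(signature):
    """Split the signature into BANDS chunks; each (band, chunk) is a bucket key."""
    return [(b, tuple(signature[b * ROWS:(b + 1) * ROWS])) for b in range(BANDS)]


def dedup_dump(docs):
    """Greedy: keep a document unless it shares a bucket with one already kept."""
    seen_keys = set()
    kept = []
    for text in docs:
        keys = band_keys(minhash_signature(text))
        if any(k in seen_keys for k in keys):
            continue  # likely near-duplicate of an earlier document in this dump
        seen_keys.update(keys)
        kept.append(text)
    return kept


def dedup_per_dump(dumps):
    """Deduplicate each dump against itself only; nothing is compared across dumps."""
    return {name: dedup_dump(docs) for name, docs in dumps.items()}
```

With this design, the same page appearing in two different dumps survives in both, because deduplication never compares documents across dumps.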

Community Feedback Loop: the creators at Hugging Face have indicated they intend to improve the dataset iteratively over time, through surveys, polls, open-ended discussions, and direct interactions.

What does FineWeb contain?

Let’s take a look at the general makeup of FineWeb’s dataset.

The dataset is based on internet crawls between the summer of 2013 and the winter of 2024.[2]

It was created by processing and distilling 38,000 TB of CommonCrawl dumps into a 45 TB dataset ready for language model training.[2]

The dataset contains multiple configurations, so you can load the default configuration or a specific dump/crawl such as CC-MAIN-2023-50. The dataset card on Hugging Face lists the dumps/crawls you can load selectively.
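Loading a single dump looks roughly like this with the `datasets` library, following the `load_dataset("HuggingFaceFW/fineweb", name=..., split="train", streaming=True)` pattern shown on the dataset card; streaming avoids downloading the full 45 TB. The helpers `dump_config_name` and `stream_fineweb` are hypothetical names of my own, and the `url` field used in the usage note is an assumption to check against the dataset card.

```python
def dump_config_name(year, week):
    """Build a CommonCrawl-style dump name such as CC-MAIN-2023-50 (assumed year-week format)."""
    return f"CC-MAIN-{year}-{week:02d}"


def stream_fineweb(config="CC-MAIN-2023-50", n=3):
    """Yield the first n records of one FineWeb configuration (needs network access)."""
    # Imported lazily so dump_config_name works even without `datasets` installed.
    from datasets import load_dataset

    ds = load_dataset("HuggingFaceFW/fineweb", name=config, split="train", streaming=True)
    # IterableDataset.take(n) returns a new streaming dataset with only n examples.
    yield from ds.take(n)
```

For example, `for row in stream_fineweb(dump_config_name(2023, 50)): print(row["url"])` should print three URLs from the CC-MAIN-2023-50 crawl, assuming `datasets` is installed and the Hub is reachable.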

It contains a variety of text from academic papers, books, news articles, blogs, forums, and more, aiming for a holistic representation of language use.

It also includes structured content such as tables and lists, which can help train models on data extraction and interpretation tasks.

Use Cases & Applications

One of the things I love about FineWeb is the care taken to anonymize Personally Identifiable Information (PII) and to try to avoid toxic or biased content. We can certainly debate whether this is the dataset’s responsibility to solve, but I like knowing the data has received that treatment, and ultimately it gives the community another fantastic corpus for training NLP and LLM applications.

FineWeb covers a very wide range of topics and styles while maintaining high quality. One use case we may begin to see is FineWeb being used to improve the benchmarking and evaluation of language models, adding more comprehensive representation to existing benchmarks.

If you are considering FineWeb for coding tasks, please note the known limitation shared on the README:

As a consequence of some of the filtering steps applied, it is likely that code content is not prevalent in our dataset. If you are training a model that should also perform code tasks, we recommend you use FineWeb with a code dataset, such as The Stack v2.
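One way to follow that advice is to sample from a web source and a code source at a fixed ratio. The sketch below does this with two plain Python iterators and a seeded random generator; `mix_sources` is a hypothetical helper, and the 10% code share is an arbitrary assumption, not a recommendation from the README. If both sources are Hugging Face datasets, `datasets.interleave_datasets` with its `probabilities` argument serves a similar purpose.

```python
import random
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def mix_sources(web: Iterable[T], code: Iterable[T],
                code_fraction: float = 0.1, seed: int = 0) -> Iterator[T]:
    """Draw from `code` with probability code_fraction, else from `web`.

    Stops as soon as the chosen source is exhausted; order within each
    source is preserved.
    """
    rng = random.Random(seed)  # seeded so the mixture is reproducible
    web_it, code_it = iter(web), iter(code)
    while True:
        source = code_it if rng.random() < code_fraction else web_it
        try:
            yield next(source)
        except StopIteration:
            return
```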

I’m excited to see what new use cases and advancements this will bring to the GenAI community; in fact, dozens of open-source models trained on FineWeb are already listed on the Hugging Face Hub: https://huggingface.co/models?dataset=dataset:HuggingFaceFW/fineweb

The post 🍷FineWeb: the new Pile 🤔 appeared first on MakeWithData.