AWS Cost-Saving Checklist: Quick and Easy Ways to Reduce Your Bills

It’s 2024, every tech company wants to do more with less, and that means reducing AWS costs. Review this simple checklist to find quick wins that will lower your next AWS bill.

Also, with industries everywhere pursuing GenAI initiatives to add value and stand out in their markets, the total cost of ownership of cloud infrastructure is becoming even more important.

1. Use gp3 EBS Volumes vs gp2

If you use EC2 instances at all, you’re almost certainly using EBS volumes as well, even if only for the root ( / ) volume. An often-overlooked saving is migrating from gp2 to gp3 EBS volumes, thanks to gp3’s lower pricing and more flexible performance model.

Here’s why you should switch to gp3 if you haven’t already:

Lower cost per GB: up to 20% lower than gp2.

Higher baseline performance: every gp3 volume includes 3,000 IOPS and 125 MiB/s regardless of size, whereas gp2 baseline IOPS scale with volume size (3 IOPS per GiB).

Flexibility to scale IOPS (Input/Output Operations Per Second) and throughput independently of storage capacity. Scaling up to meet disk performance demands is therefore more cost-effective than with gp2, where the only way to get more performance is to provision a larger volume.
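If you have many volumes to migrate, a script helps. The minimal sketch below is not from Amazon’s blog: it takes volume dicts shaped like the `Volumes` list returned by boto3’s `ec2.describe_volumes()` and builds keyword arguments for `ec2.modify_volume()`. It assumes you want each gp3 volume to keep at least its current gp2 baseline IOPS (3 per GiB, minimum 100, maximum 16,000); the helper names are hypothetical.

```python
from typing import Optional

GP3_BASELINE_IOPS = 3_000
GP3_BASELINE_THROUGHPUT_MIBPS = 125


def gp2_baseline_iops(size_gib: int) -> int:
    """gp2 baseline IOPS: 3 per GiB, with a floor of 100 and a cap of 16,000."""
    return min(max(3 * size_gib, 100), 16_000)


def gp3_modify_kwargs(volume: dict) -> Optional[dict]:
    """Build ec2.modify_volume() kwargs to move a gp2 volume to gp3.

    Returns None for volumes that are not gp2. IOPS is the larger of the gp3
    baseline and the volume's current gp2 baseline, so large gp2 volumes
    (over 1,000 GiB) don't lose IOPS in the migration.
    """
    if volume.get("VolumeType") != "gp2":
        return None
    iops = max(GP3_BASELINE_IOPS, gp2_baseline_iops(volume["Size"]))
    return {
        "VolumeId": volume["VolumeId"],
        "VolumeType": "gp3",
        "Iops": iops,
        # gp3 baseline throughput; raise it if the workload needs more
        # (larger gp2 volumes can reach up to 250 MiB/s).
        "Throughput": GP3_BASELINE_THROUGHPUT_MIBPS,
    }
```

With boto3 you would feed it `ec2.describe_volumes(Filters=[{"Name": "volume-type", "Values": ["gp2"]}])["Volumes"]` and call `ec2.modify_volume(**kwargs)` for each non-None result. The change happens online, but AWS requires a cooldown of at least six hours before the same volume can be modified again, so review the IOPS and throughput values first.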

Don’t take my word for it; check out Amazon’s blog post on migrating from gp2 to gp3: https://aws.amazon.com/blogs/storage/migrate-your-amazon-ebs-volumes-from-gp2-to-gp3-and-save-up-to-20-on-costs/

2. Use a VPC Endpoint to Reduce S3 Costs

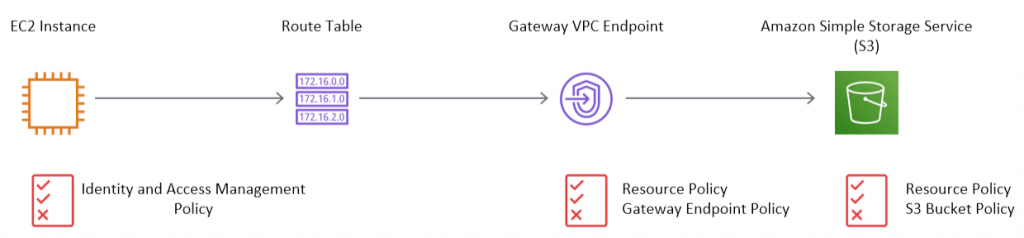

Do you know how your requests are reaching Amazon’s APIs when using S3? Ever wonder why your NAT costs are so high each month?

By default, traffic from a VPC to Amazon S3 is routed to S3’s public endpoints. For instances in private subnets, that typically means passing through a NAT (Network Address Translation) gateway, which is charged per GB processed. Your VPC is in AWS, and so is S3, so wouldn’t it be nice to keep that traffic on AWS’s backbone? You can!

You can create a VPC Endpoint for S3, which requires zero changes to your applications or workloads and routes S3 requests directly from your VPC to S3. Gateway endpoints for S3 carry no additional charge, and this direct path can often reduce your NAT costs by 50–70%, depending on data volume and which region you use. It can also improve network performance.

Again, don’t take my word for it; read Amazon’s blog post and instructions here: https://aws.amazon.com/blogs/architecture/reduce-cost-and-increase-security-with-amazon-vpc-endpoints/

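Note: you can also create endpoints for other AWS services, such as DynamoDB, Kinesis, Lambda, and more, but S3 is the most beneficial for data-intensive organizations. Mind the difference between endpoint types: “Gateway” endpoints are available only for S3 and DynamoDB and cost nothing, while “Interface” endpoints (powered by AWS PrivateLink) cover many more services but are billed per hour and per GB processed.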
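To make the Gateway option concrete, here is a minimal sketch that builds the keyword arguments for boto3’s `ec2.create_vpc_endpoint()`. The region, VPC ID, and route table IDs are placeholders you would supply, and the helper name is hypothetical.

```python
from typing import List


def s3_gateway_endpoint_kwargs(region: str, vpc_id: str, route_table_ids: List[str]) -> dict:
    """Build ec2.create_vpc_endpoint() kwargs for an S3 Gateway endpoint.

    A Gateway endpoint adds a route (pointing at an AWS-managed prefix list)
    to each listed route table, so S3 traffic from subnets using those tables
    skips the NAT gateway without any application changes.
    """
    if not route_table_ids:
        raise ValueError("attach the endpoint to at least one route table")
    return {
        "VpcEndpointType": "Gateway",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.s3",
        "RouteTableIds": list(route_table_ids),
    }
```

Usage would look like `ec2.create_vpc_endpoint(**s3_gateway_endpoint_kwargs("us-east-1", "vpc-0123", ["rtb-0abc"]))`. Include the route tables of every private subnet that talks to S3. Gateway endpoints only cover buckets in the same region as the VPC, so cross-region S3 traffic still takes the NAT path.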

3. Watch Out for S3 Object Versioning

Accidents happen. We’ve all been there: something gets accidentally deleted and panic ensues. That’s why we create backups and build BCDR (Business Continuity / Disaster Recovery) plans.

If you use S3, you may have added protection against accidental deletion by enabling S3 Versioning, which keeps versions (copies) of every object in a bucket and allows recovery to a previous version.

However, the risk with this feature is that old versions can accumulate out of control, especially when applications frequently overwrite the same objects, as in data lake and lakehouse architectures. These older copies are called “noncurrent” versions because they are not the active copy of the object, and you pay to store them just like current ones. Add a lifecycle rule to your buckets so that noncurrent versions expire after X days; if you need to retain at least one noncurrent copy, a lifecycle rule can also keep a set number of the newest noncurrent versions regardless of age.
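A minimal sketch of such a rule, shaped as one entry in the `Rules` list that boto3’s `s3.put_bucket_lifecycle_configuration()` expects. The 30-day window, the `keep_newest` option, and the helper name are illustrative assumptions, not recommendations from the user guide.

```python
from typing import Optional


def noncurrent_expiration_rule(days: int, keep_newest: Optional[int] = None, prefix: str = "") -> dict:
    """Lifecycle rule that permanently deletes noncurrent object versions.

    days: how long a version must have been noncurrent before it expires.
    keep_newest: if set, S3 always retains this many of the newest noncurrent
    versions; only older ones are expired once they pass `days`.
    prefix: limit the rule to keys under this prefix ("" means whole bucket).
    """
    expiration = {"NoncurrentDays": days}
    if keep_newest is not None:
        expiration["NewerNoncurrentVersions"] = keep_newest
    return {
        "ID": f"expire-noncurrent-after-{days}d",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "NoncurrentVersionExpiration": expiration,
    }
```

You would apply it with `s3.put_bucket_lifecycle_configuration(Bucket="my-bucket", LifecycleConfiguration={"Rules": [noncurrent_expiration_rule(30, keep_newest=1)]})`, where the bucket name is a placeholder. Be aware that this call replaces the bucket’s entire lifecycle configuration, so include any existing rules in the same list.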

See the user guide for more information about deleting object versions: https://docs.aws.amazon.com/AmazonS3/latest/userguide/DeletingObjectVersions.html

4. S3 Lifecycle, Storage Classes, and Analytics

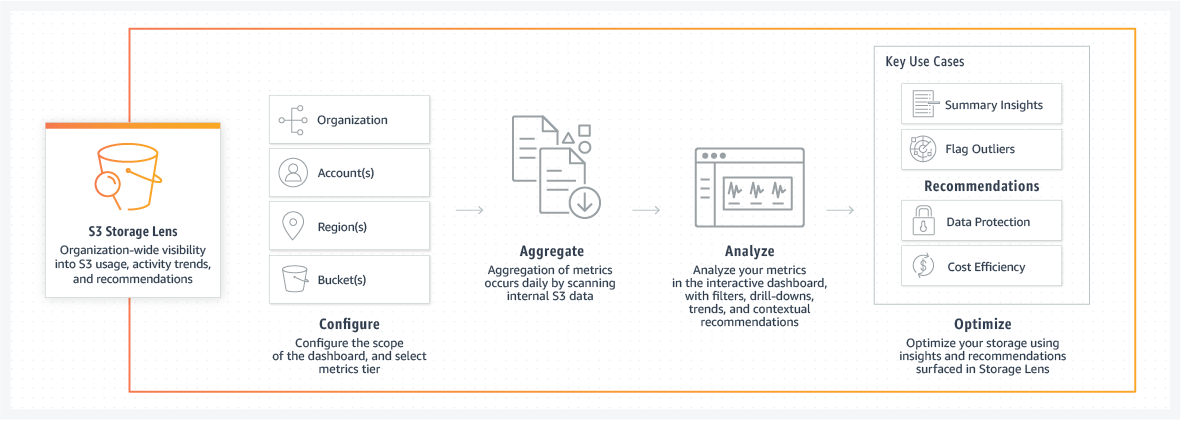

Amazon provides a few great ways to analyze the volume and trends of your S3 buckets through its S3 storage analytics and insights features, including S3 Storage Lens and S3 Inventory.

S3 Storage Lens is useful for identifying your largest buckets by object count, physical size, noncurrent versions, and how these metrics trend over time. For example, when you implement #3 from this checklist, use S3 Storage Lens to view the percentage of your S3 storage occupied by noncurrent versions. When you implement lifecycle rules, Lens is helpful to validate those changes (in addition to seeing 🤑 in Cost Explorer).
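Storage Lens is the right tool across an account. For a quick spot check of a single bucket, a different technique (not a Storage Lens feature) is to page through boto3’s `list_object_versions` results and add up sizes yourself. The sketch below assumes pages shaped like that API’s output; the helper name is hypothetical.

```python
from typing import Iterable


def noncurrent_share(pages: Iterable[dict]) -> float:
    """Fraction of stored bytes held by noncurrent object versions.

    pages: responses shaped like boto3's list_object_versions() output.
    Only each page's "Versions" entries ("Size", "IsLatest") are counted;
    delete markers hold no data and are ignored.
    """
    current = noncurrent = 0
    for page in pages:
        for version in page.get("Versions", []):
            if version["IsLatest"]:
                current += version["Size"]
            else:
                noncurrent += version["Size"]
    total = current + noncurrent
    return noncurrent / total if total else 0.0
```

Usage would be `noncurrent_share(s3.get_paginator("list_object_versions").paginate(Bucket="my-bucket"))`. On large buckets this is slow and incurs LIST request charges, which is exactly why Storage Lens is the better choice at scale.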

Another useful way to look at your data in Lens is by storage class. Beyond the default S3 Standard class, you may be able to define lifecycle rules on your buckets to get the best price-to-performance. For infrequently accessed data, S3 Standard-Infrequent Access (S3 Standard-IA) and S3 One Zone-IA are suitable classes that can reduce storage costs by up to 40%, though both charge a per-GB retrieval fee. One Zone-IA stores data in a single Availability Zone, so it is ideal when high availability isn’t necessary. For cold data that is accessed very rarely, such as backups and archives, use one of the S3 Glacier storage classes, which can save up to 95% on storage costs depending on data volume and the specific Glacier tier.

If your S3 access patterns are predictable and your data is cleanly separated by bucket and/or path prefix, you can save tons of money by defining lifecycle rules that transition objects from hotter storage classes (e.g., S3 Standard) to cooler ones when appropriate.
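As a minimal sketch, the helper below builds one tiering rule for a prefix, again shaped for the `Rules` list of `s3.put_bucket_lifecycle_configuration()`. The prefix, day counts, and helper name are illustrative assumptions; the storage class strings are the names the S3 lifecycle API uses.

```python
from typing import List, Tuple

# Lifecycle transition targets, as named by the S3 API.
VALID_CLASSES = {"STANDARD_IA", "ONEZONE_IA", "INTELLIGENT_TIERING", "GLACIER_IR", "GLACIER", "DEEP_ARCHIVE"}


def tiering_rule(prefix: str, steps: List[Tuple[int, str]]) -> dict:
    """Lifecycle rule that moves objects under `prefix` to cooler classes.

    steps: (days_after_creation, storage_class) pairs, for example
    [(30, "STANDARD_IA"), (90, "GLACIER")]. Days must strictly increase.
    """
    previous = -1
    transitions = []
    for days, storage_class in steps:
        if storage_class not in VALID_CLASSES:
            raise ValueError(f"unknown storage class: {storage_class}")
        if days <= previous:
            raise ValueError("transition days must strictly increase")
        previous = days
        transitions.append({"Days": days, "StorageClass": storage_class})
    return {
        "ID": f"tier-{prefix.strip('/') or 'all'}",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": transitions,
    }
```

For example, `tiering_rule("logs/", [(30, "STANDARD_IA"), (90, "GLACIER")])` keeps new logs in S3 Standard for a month, then moves them to Standard-IA and later to Glacier. S3 only allows lifecycle transitions into Standard-IA or One Zone-IA once objects are at least 30 days old, and it validates other constraints when you save the configuration.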

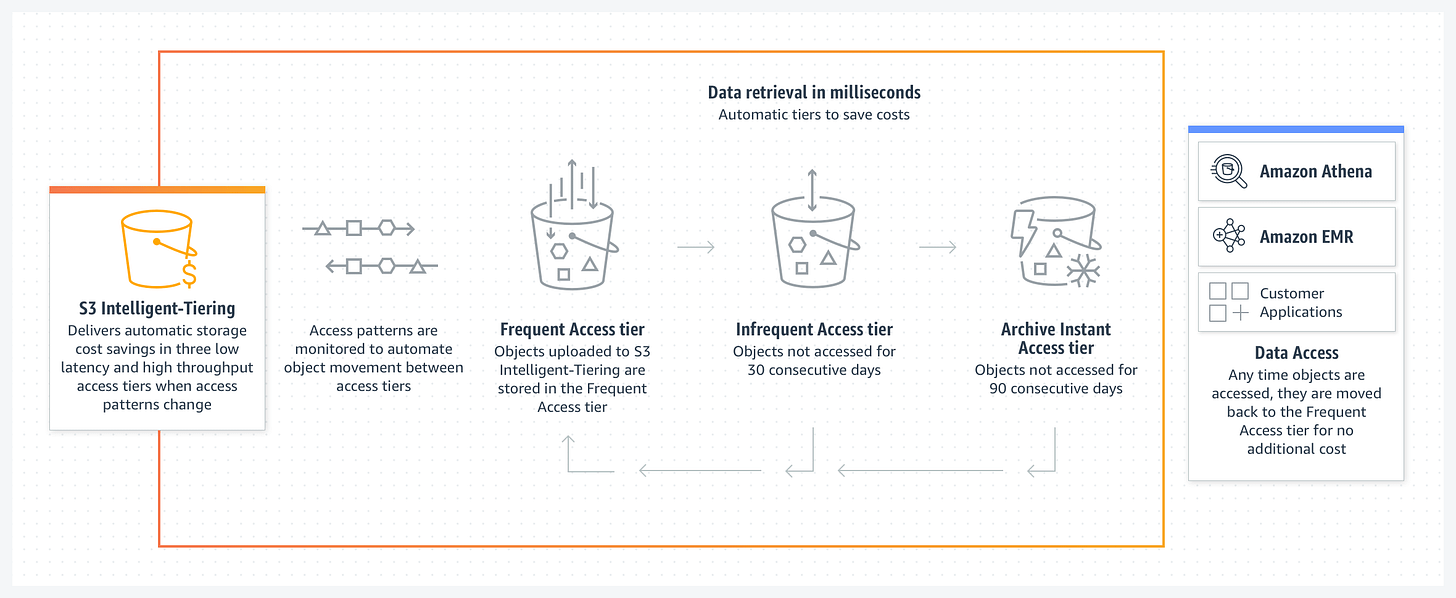

Alternatively, if your access patterns are unpredictable or changing, you may benefit from S3 Intelligent-Tiering. In exchange for a small monthly per-object monitoring and automation charge, Amazon tracks access patterns and automatically moves objects to lower-cost access tiers when they haven’t been accessed for a while (for example, to the Infrequent Access tier after 30 consecutive days without access).
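One way to opt existing data into Intelligent-Tiering is a lifecycle transition. This is a minimal sketch assuming you want everything under a prefix moved, skipping small objects: objects under 128 KiB are never auto-tiered, so moving them gains nothing. The helper name and defaults are hypothetical.

```python
# Objects smaller than 128 KiB are never moved out of the Frequent Access tier.
MIN_AUTO_TIER_BYTES = 128 * 1024


def intelligent_tiering_rule(prefix: str = "", min_size_bytes: int = MIN_AUTO_TIER_BYTES) -> dict:
    """Lifecycle rule that transitions objects into S3 Intelligent-Tiering.

    Days=0 moves objects on the next lifecycle run; after that, S3 shifts each
    object between access tiers based on its own access pattern.
    ObjectSizeGreaterThan is exclusive, so objects of min_size_bytes or less
    stay in their current class.
    """
    return {
        "ID": "to-intelligent-tiering",
        "Filter": {"And": {"Prefix": prefix, "ObjectSizeGreaterThan": min_size_bytes}},
        "Status": "Enabled",
        "Transitions": [{"Days": 0, "StorageClass": "INTELLIGENT_TIERING"}],
    }
```

For new uploads you can skip lifecycle entirely and write objects directly into the class, for example with `s3.put_object(..., StorageClass="INTELLIGENT_TIERING")` in boto3.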

5. AWS Costs: Everything Else…

There are plenty of other ways to keep costs down, and I’d rather you walk away equipped with as many tactics as possible. So if you’ve read this far, here is a longer list of cost-saving optimizations:

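Use Spot Instances for interruptible workloads that don’t require high availability, and save up to 90% compared to On-Demand prices. AWS can reclaim Spot capacity with a two-minute warning, so the workload must tolerate interruptions.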

Use Savings Plans to save up to 72% on compute by committing to a consistent amount of usage (measured in $/hour) for a one- or three-year term.

Routinely check AWS Compute Optimizer for recommendations and right-size your instances to fit the workload.

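Avoid relying on CloudWatch for custom metrics: each custom metric (every unique combination of metric name and dimensions) is billed monthly, so costs climb quickly. You’re often better off with an open stack like Prometheus + Grafana, or a managed SaaS for observability.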

Use Auto Scaling groups (ASGs) so capacity scales in when demand drops and you stop paying for idle resources.

Prefer container-based services like ECS or EKS over individual host- or VM-based deployments. Containers make it easier to pack workloads onto instances and fully use the resources you’re paying for.

Add retention policies to your CloudWatch Log Groups. Don’t accidentally leave them at the default retention of “Never expire”!

Search for unused EBS volumes, meaning volumes in the “available” state that aren’t attached to any instance, and delete the ones you no longer need.

Consider putting an Amazon CloudFront distribution in front of your S3 bucket to cache content and reduce data transfer costs and cross-region data movement.

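Remember that DynamoDB has a Standard-Infrequent Access (Standard-IA) table class, similar to S3 Standard-IA, which lowers storage prices in exchange for higher read and write prices on rarely accessed tables.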
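Two of the checks above, log retention and unused EBS volumes, are easy to script. The minimal sketch below assumes you have already fetched `describe_log_groups()["logGroups"]` from the CloudWatch Logs client and `describe_volumes()["Volumes"]` from the EC2 client with boto3; the helper names are hypothetical.

```python
from typing import Iterable, List


def log_groups_without_retention(log_groups: Iterable[dict]) -> List[str]:
    """Names of log groups set to "Never expire".

    log_groups: entries shaped like describe_log_groups()["logGroups"];
    a group without a retention policy has no "retentionInDays" key.
    """
    return [g["logGroupName"] for g in log_groups if "retentionInDays" not in g]


def unattached_volumes(volumes: Iterable[dict]) -> List[str]:
    """IDs of EBS volumes in the "available" state (not attached to any instance).

    volumes: entries shaped like describe_volumes()["Volumes"].
    """
    return [v["VolumeId"] for v in volumes if v.get("State") == "available"]
```

From there, `logs.put_retention_policy(logGroupName=name, retentionInDays=30)` sets a retention period (CloudWatch only accepts specific values, and 30 is one of them). For volumes, you can also filter server-side with `ec2.describe_volumes(Filters=[{"Name": "status", "Values": ["available"]}])`; snapshot anything you might need before deleting it.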

Before you go…

I hope you find these tips useful for keeping your AWS costs lean, and that they enable you or your organization to do even more!

Have a cost saving tip of your own that I missed? Leave a comment and I’ll try it out!

Follow this newsletter and my LinkedIn for more tech content, or find me on YouTube @MakeWithData!